Master the Data Integration Process for Better Insights

- Matthew Amann

- May 25, 2025

Mastering the Data Integration Fundamentals

At its core, data integration is the process of combining data from different sources into a single, unified view. This allows businesses to gain a complete understanding of their operations, customers, and market trends. Rather than being a luxury, this unified view is essential for businesses to compete effectively.

Think of data integration like assembling a jigsaw puzzle. Each individual piece of data holds value, but the complete picture only emerges when all the pieces connect. This comprehensive view enables more in-depth analysis and more informed business decisions.

Key Components of Effective Data Integration

Successful data integration hinges on several crucial components. Data extraction is the first step, gathering data from various sources, including legacy systems and cloud-based applications.

Next, data transformation standardizes the data into a consistent format. This ensures compatibility and accuracy, much like translating different languages into a common one.

Finally, data loading transfers the transformed data into a target system. This could be a data warehouse or a data lake, where the data can be accessed and analyzed.
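To make the three steps concrete, here is a minimal sketch in Python. It assumes a small CSV export as the source and uses an in-memory SQLite database as a stand-in for a data warehouse. The table and field names are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a legacy system (the extraction source).
RAW_CSV = """id,name,amount
1, Alice ,10.50
2,Bob,
3,carol,7.25
"""

def extract(text: str) -> list[dict]:
    """Extract: read rows from a CSV export."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows: list[dict]) -> list[tuple]:
    """Transform: trim and title-case names, parse amounts, drop rows missing an amount."""
    clean = []
    for row in rows:
        if not row["amount"].strip():
            continue  # skip incomplete records
        clean.append((int(row["id"]), row["name"].strip().title(), float(row["amount"])))
    return clean

def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write the cleaned rows into a target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (id INTEGER PRIMARY KEY, name TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT id, name, amount FROM sales ORDER BY id").fetchall())
# [(1, 'Alice', 10.5), (3, 'Carol', 7.25)]
```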

The growing importance of data integration is reflected in the expanding market. Valued at USD 15.0 billion in 2023, the market is projected to reach USD 42.27 billion by 2032. This significant growth is fueled by factors like globalization, the increasing number of data sources, and a greater focus on data quality. For more detailed statistics, visit: https://www.skyquestt.com/report/data-integration-market
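For a sense of scale, the compound annual growth rate (CAGR) implied by those two figures over the nine years from 2023 to 2032 can be worked out directly. This is simple arithmetic on the quoted numbers, not a figure taken from the report:

```latex
\text{CAGR} = \left(\frac{V_{2032}}{V_{2023}}\right)^{1/(2032-2023)} - 1
            = \left(\frac{42.27}{15.0}\right)^{1/9} - 1
            \approx 1.122 - 1 = 0.122
```

That works out to roughly 12% growth per year.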

Evolution of the Data Integration Process

Data integration has evolved significantly. Early methods relied heavily on batch processing, transferring data in large chunks at scheduled times. Batch processing still suits many workloads, but it is too slow for use cases that need up-to-the-minute data.

As a result, real-time integration has become essential. This allows businesses to access and respond to data as it's generated. It’s like the difference between snail mail and instant messaging.

Modern data integration increasingly uses API-based integration. This provides a flexible and scalable method for connecting diverse systems, enabling businesses to harness the full potential of their data for improved agility and informed decision-making.

Business Outcomes Beyond Technical Achievements

While the technical aspects are important, the true value of data integration lies in the business outcomes it enables. Effective data integration can:

Improve Decision-Making: A unified view of data provides valuable insights to guide strategic decisions.

Enhance Customer Experience: Integrating customer data from multiple sources allows businesses to personalize interactions and offer more targeted products and services.

Streamline Operations: Automating data workflows enhances efficiency and reduces manual effort.

Increase Revenue: By identifying trends and uncovering opportunities, data integration can drive revenue growth.

These benefits translate into a significant competitive advantage, enabling businesses to stay ahead in a dynamic market. As organizations continue to generate and collect data from various sources, mastering data integration fundamentals will be crucial for success.

Breaking Down the Data Integration Process

In practice, much data integration work follows the Extract, Transform, Load (ETL) method, a structured approach for moving data from many sources into one target system. Learn more with this in-depth look at Extract Transform Load (ETL).

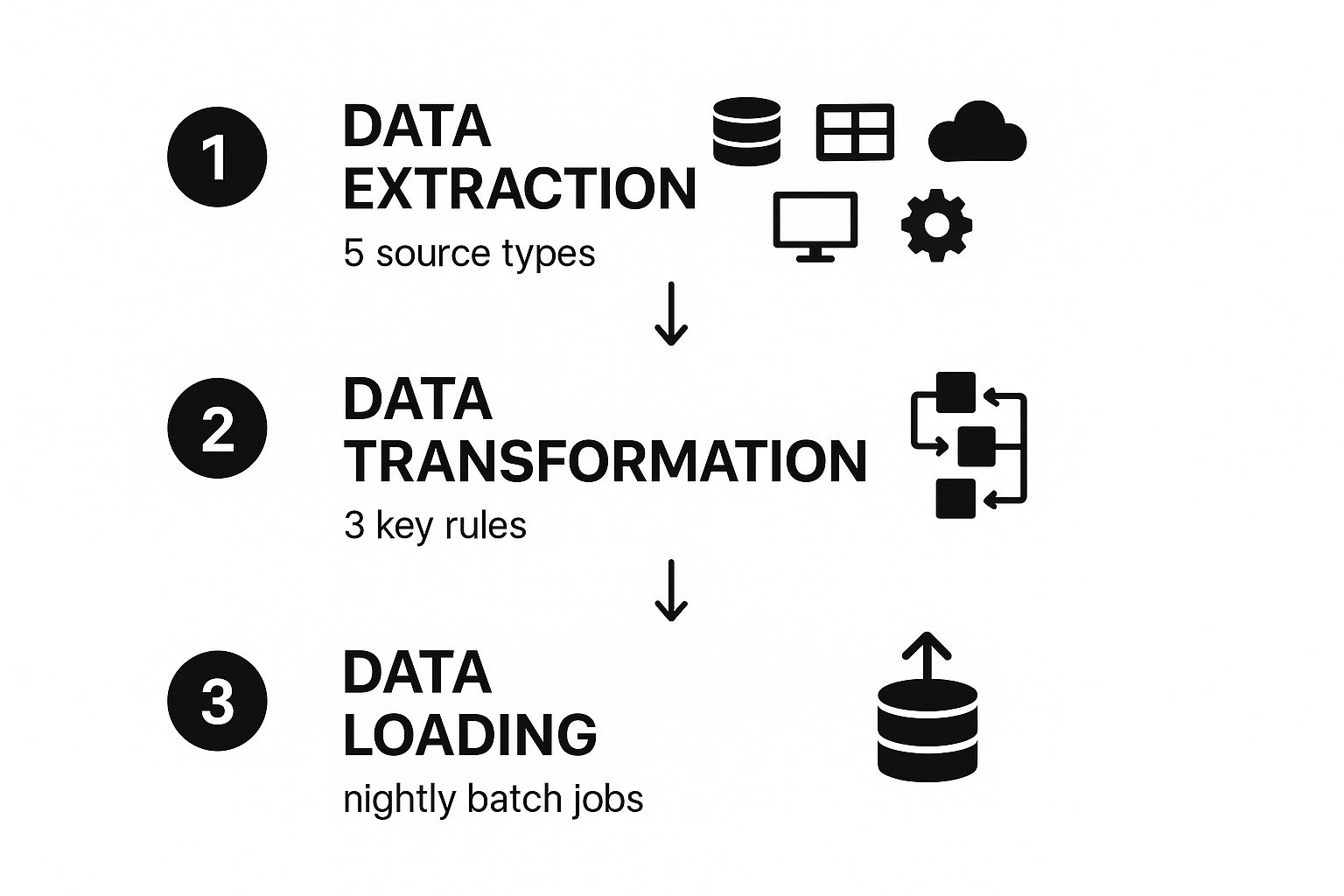

The infographic above visualizes a typical ETL workflow, illustrating the journey of data from extraction to transformation and, finally, loading.

In this example, data is extracted from five different sources, transformed according to three key rules, and loaded via nightly batch jobs. Applying the same rules on every run helps keep data quality consistent.

Understanding the ETL Process

The data integration process, driven by ETL, is critical for data-driven business decisions. The process begins with the extraction phase, gathering data from diverse sources such as databases, cloud applications, spreadsheets, and even IoT devices. Accessing all necessary data, regardless of origin, is key.

The next step is transformation. This phase converts the extracted data into a uniform format. It often involves cleaning, validating, and enriching the data, ensuring its quality and preparing it for analysis. This phase resolves inconsistencies like varied date formats or naming conventions.
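A transformation step that resolves the date-format and naming inconsistencies mentioned above might look like the following sketch. The field aliases and the list of accepted date formats are illustrative assumptions. In practice they would come from your own source systems.

```python
from datetime import datetime

# Hypothetical field-name variants seen across source systems, mapped to one standard name.
FIELD_ALIASES = {
    "cust_id": "customer_id",
    "CustomerID": "customer_id",
    "order_dt": "order_date",
    "OrderDate": "order_date",
}

# Date formats assumed to appear in the sources, tried in this order.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d.%m.%Y", "%Y%m%d")

def normalize_date(value: str) -> str:
    """Return the date as ISO 8601 (YYYY-MM-DD), trying each known format in turn."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date format: {value!r}")

def standardize(record: dict) -> dict:
    """Rename fields to their standard names and normalize the order date."""
    out = {FIELD_ALIASES.get(key, key): val for key, val in record.items()}
    if "order_date" in out:
        out["order_date"] = normalize_date(out["order_date"])
    return out

print(standardize({"CustomerID": 42, "OrderDate": "03/15/2025"}))
print(standardize({"cust_id": 7, "order_dt": "15.03.2025"}))
```

Ambiguous inputs such as 04/03/2025 are parsed by whichever format matches first (here, month first). For that reason, it is safer to record which format each source uses than to guess.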

Finally, the loading phase transfers the transformed data into a target system. This could be a data warehouse, data lake, or another database. This centralized repository makes data accessible for analysis and reporting. The frequency of data loading, from real-time updates to scheduled batch jobs, depends on business requirements.
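Loads are often re-run: a nightly job fails and is retried, or a real-time feed redelivers a record. It therefore helps if loading is idempotent, so that running the same load twice leaves the target unchanged. The sketch below assumes a hypothetical `customers` table keyed on `customer_id` and uses SQLite's upsert syntax as a stand-in. Many data warehouses offer a comparable MERGE or upsert statement.

```python
import sqlite3

def upsert_customers(conn: sqlite3.Connection, rows: list[tuple[int, str, str]]) -> None:
    """Insert new customers or update existing ones, keyed on customer_id.

    Re-running the same batch leaves the table unchanged, so a failed job can be
    retried safely. Requires SQLite 3.24+ for ON CONFLICT ... DO UPDATE.
    """
    conn.execute(
        """CREATE TABLE IF NOT EXISTS customers (
               customer_id INTEGER PRIMARY KEY,
               email TEXT NOT NULL,
               updated_at TEXT NOT NULL)"""
    )
    conn.executemany(
        """INSERT INTO customers (customer_id, email, updated_at)
           VALUES (?, ?, ?)
           ON CONFLICT(customer_id) DO UPDATE SET
               email = excluded.email,
               updated_at = excluded.updated_at""",
        rows,
    )
    conn.commit()

conn = sqlite3.connect(":memory:")
upsert_customers(conn, [(1, "a@example.com", "2025-05-01"), (2, "b@example.com", "2025-05-01")])
upsert_customers(conn, [(2, "b.new@example.com", "2025-05-02")])  # a later batch updates row 2
upsert_customers(conn, [(2, "b.new@example.com", "2025-05-02")])  # a retry creates no duplicates
print(conn.execute("SELECT * FROM customers ORDER BY customer_id").fetchall())
```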

Data Integration Approaches: Batch vs. Real-Time

Several data integration approaches exist, each with its advantages and disadvantages. Batch integration, a traditional method, processes data in large volumes at scheduled intervals. This is cost-effective for large datasets but may not suit time-sensitive applications.

Real-time integration, on the other hand, processes data as it’s generated, delivering immediate insights. This approach is crucial for applications like fraud detection or personalized recommendations, enabling businesses to respond instantly to changing conditions.
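The defining trait of real-time integration is that each event is handled as it arrives rather than in a scheduled batch. The sketch below illustrates this with a deliberately simple, made-up fraud heuristic: flag a card with more than three transactions within 60 seconds. A production system would read from a streaming platform and use far richer models. Here, a plain Python list stands in for the event stream.

```python
from collections import defaultdict, deque
from typing import Iterable, Iterator

def flag_rapid_transactions(
    events: Iterable[tuple[str, float]],  # (card_id, timestamp in seconds)
    max_count: int = 3,
    window_seconds: float = 60.0,
) -> Iterator[tuple[str, float]]:
    """Process events one at a time and yield each event that pushes a card over the limit.

    For every card, only the timestamps that fall inside the sliding window are kept.
    """
    recent: dict[str, deque] = defaultdict(deque)
    for card_id, ts in events:
        window = recent[card_id]
        window.append(ts)
        # Drop timestamps that have fallen out of the window.
        while window and ts - window[0] > window_seconds:
            window.popleft()
        if len(window) > max_count:
            yield card_id, ts

stream = [("card-A", 0), ("card-A", 10), ("card-B", 12), ("card-A", 20),
          ("card-A", 25), ("card-A", 200)]
print(list(flag_rapid_transactions(stream)))  # [('card-A', 25)]
```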

API-based integration connects systems and exchanges data using APIs. This approach provides flexibility and scalability, making it suitable for connecting cloud-based applications. Its popularity continues to grow alongside the rise of cloud computing.
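Many APIs return large result sets in pages, so an API-based integration usually has to follow pagination until the data is exhausted. The sketch below assumes a hypothetical cursor-based response shape (`items` plus `next_cursor`) and a hypothetical `/contacts` endpoint. Real APIs vary. `fake_fetch` stands in for an authenticated HTTP request so the example runs offline.

```python
from typing import Callable, Iterator, Optional

# Assumed page shape: {"items": [...], "next_cursor": "<token>" or None}.
FetchPage = Callable[[Optional[str]], dict]

def pull_all(fetch_page: FetchPage) -> Iterator[dict]:
    """Yield every item, following cursor-based pagination until no cursor is returned."""
    cursor: Optional[str] = None
    while True:
        page = fetch_page(cursor)
        yield from page["items"]
        cursor = page.get("next_cursor")
        if not cursor:
            break

# Offline stand-in for an HTTP call such as GET /contacts?cursor=...
FAKE_PAGES = {
    None: {"items": [{"id": 1}, {"id": 2}], "next_cursor": "p2"},
    "p2": {"items": [{"id": 3}], "next_cursor": None},
}

def fake_fetch(cursor: Optional[str]) -> dict:
    return FAKE_PAGES[cursor]

print([item["id"] for item in pull_all(fake_fetch)])  # [1, 2, 3]
```

Passing the fetch function in as a parameter keeps the pagination logic separate from transport details such as authentication and retries.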

To illustrate the differences between these approaches, the table below compares traditional batch integration with real-time integration, focusing on methodology, technology, and implementation challenges.

| Process Step | Traditional Batch Integration | Real-time Integration | Key Considerations |
|---|---|---|---|
| Data Extraction | Scheduled intervals from multiple sources | Continuous from various sources | Data volume, velocity, and variety |
| Data Transformation | Standardized rules applied to large datasets | On-the-fly transformation as data arrives | Data quality and consistency |
| Data Loading | Periodic loading into target system (e.g., data warehouse) | Immediate loading into target system (e.g., operational database) | Latency and system performance |
| Latency | High (minutes to hours) | Low (milliseconds to seconds) | Time sensitivity of business needs |
| Cost | Lower infrastructure costs | Higher infrastructure and maintenance costs | Budget and resources |
| Complexity | Less complex to implement | More complex to implement and manage | Technical expertise and infrastructure |
| Use Cases | Reporting, analytics, historical analysis | Fraud detection, real-time dashboards, personalized recommendations | Business requirements and objectives |

As the table shows, the choice between batch and real-time integration hinges on factors like data volume, velocity, and the time sensitivity of business needs. Batch integration is generally simpler and more cost-effective for large-scale data processing, while real-time integration offers the immediate insights that time-sensitive applications need.

By understanding these various approaches and the core ETL process, businesses can select the optimal strategy for their specific needs. This empowers organizations to fully leverage their data for a competitive edge.

Selecting The Right Integration Tools For Your Reality

Choosing the right data integration tools can be a daunting task. The key to success lies in focusing on your specific business needs, not just the latest bells and whistles. This means understanding your data volume, the complexity of your data sources, and your team's technical skills. Consider whether you're working with high-speed data streams or large batch files. Do you need real-time integration, or will batch processing suffice? These questions are essential in guiding your tool selection.

Key Evaluation Criteria For Integration Platforms

Choosing adaptable, maintainable, and cost-effective tools is the foundation of effective data integration. Adaptability is a tool's ability to handle evolving data sources and formats. Business needs change, and your integration tools should adapt with them.

Maintainability is essential for long-term success. A complex, difficult-to-manage tool can quickly become a roadblock. Prioritize tools that are easy to understand and maintain, even as your data landscape evolves. This minimizes reliance on specialized expertise and promotes smoother operations.

Finally, consider the total cost of ownership. This includes not just the initial price, but also ongoing maintenance, training, and support. A seemingly affordable tool could become expensive over time.
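A quick way to compare total cost of ownership is to add one-time costs to recurring costs over the period you expect to use the tool. The cost categories follow the paragraph above. The figures are hypothetical, and the calculation ignores discounting and price changes.

```python
def total_cost_of_ownership(
    license_per_year: float,
    setup_cost: float,
    maintenance_per_year: float,
    training_cost: float,
    support_per_year: float,
    years: int,
) -> float:
    """One-time costs plus recurring costs over the evaluation period (undiscounted)."""
    one_time = setup_cost + training_cost
    recurring = (license_per_year + maintenance_per_year + support_per_year) * years
    return one_time + recurring

# Hypothetical figures for two tools over three years.
cheap_tool = total_cost_of_ownership(
    license_per_year=5_000, setup_cost=2_000, maintenance_per_year=20_000,
    training_cost=8_000, support_per_year=6_000, years=3,
)
pricier_tool = total_cost_of_ownership(
    license_per_year=15_000, setup_cost=5_000, maintenance_per_year=4_000,
    training_cost=3_000, support_per_year=2_000, years=3,
)
print(cheap_tool, pricier_tool)  # 103000 71000
```

With these made-up numbers, the tool with the lower license fee costs more over three years (103,000 versus 71,000) because of its higher maintenance and support costs.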

Traditional ETL Vs. Modern Cloud-Native Platforms

Many organizations are combining traditional ETL (Extract, Transform, Load) systems with modern cloud-native platforms. Traditional ETL tools excel at handling complex transformations and large data volumes. They can, however, be less agile and harder to scale.

Cloud-native platforms offer more flexibility and scalability. They may, however, lack the robust transformation features of traditional ETL systems. By combining these approaches, businesses create a practical solution that leverages the strengths of each. For instance, a company might use a cloud-native platform for real-time data intake and a traditional ETL tool like Informatica PowerCenter for complex batch processing.

To help you choose the right platform, the Integration Platform Decision Guide below compares leading data integration tools by key features, ideal use cases, pricing model, and limitations.

| Key Features | Best For | Pricing Model | Limitations |
|---|---|---|---|
| Robust ETL, complex transformations, high-volume data | Large enterprises, complex data warehousing | Subscription-based | Less agile, can be complex to manage |
| High-throughput messaging, real-time streaming | Real-time data ingestion, streaming applications | Open-source | Requires expertise to manage, limited transformation capabilities |
| Cloud-native ETL, data orchestration, ELT | Cloud data warehouses, data lakes | Consumption-based | Can be costly for high data volumes |
| Automated data pipelines, pre-built connectors | Data analytics, business intelligence | Usage-based | Limited customization options |

This table highlights the key differences between several popular integration platforms. Choosing the right one depends on your specific needs and resources.

The data integration market is driven by the increasing adoption of cloud-based solutions and growing IT environment complexity. This is especially true in large enterprises, where data integration plays a vital role in strategic decision-making and daily operational efficiency. The market is expected to grow from USD 13.97 billion in 2024 to USD 15.22 billion in 2025, a CAGR of 9.0%. This growth underscores the increasing importance of data integration in enhancing customer experience, aligning with DevOps practices, and complying with data privacy regulations. Learn more about these market trends: https://www.thebusinessresearchcompany.com/report/data-integration-global-market-report
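Over a single year, the growth rate is simply the ratio of the two values minus one, which lets you sanity-check the quoted figure:

```latex
\frac{V_{2025}}{V_{2024}} - 1 = \frac{15.22}{13.97} - 1 \approx 1.0895 - 1 = 0.0895
```

That is just under 9%, consistent with the 9.0% cited once rounding of the dollar figures is taken into account.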

Matching Tools To Specific Integration Challenges

Different tools excel in different situations. Some are designed for high-volume, batch-oriented data integration, while others are better for real-time, API-driven integration. For example, Apache Kafka is excellent for streaming data.

Tool selection often involves trade-offs. A highly specialized tool may offer great performance for a particular task but lack the flexibility for other integration needs. A more general-purpose tool might be more versatile but less efficient for specific tasks.

Carefully consider your current and future needs when evaluating integration platforms. Engaging a consultancy specializing in process automation, such as Flow Genius, can provide helpful guidance. They can help you navigate the complex landscape of integration tools and develop a strategy that meets your business goals.

Overcoming Real-World Integration Obstacles

Data integration offers significant benefits, but it's not without its challenges. Even well-planned initiatives can hit roadblocks. This section explores common obstacles and offers practical strategies to overcome them.

Common Data Integration Challenges

Several recurring challenges can hinder data integration projects. One of the most common is inconsistent data formats. Data from different sources often arrives in various formats, making it difficult to combine. It's like trying to fit puzzle pieces from different sets together: they simply won't connect until the data is standardized.

Another challenge is schema evolution. As business needs change, your data structure (the schema) must adapt. Managing these changes without disrupting existing integrations requires careful planning and flexible tools.

Poor-quality source data presents another significant obstacle. If the data you're integrating is inaccurate or incomplete, the resulting integrated view will also be flawed. It's like baking a cake: if your ingredients are bad, the cake will be too. Addressing data quality issues at the source is crucial for successful integration.

Finally, organizational silos can impede integration efforts. Different departments often have their own data systems and processes, making it difficult to share information. Breaking down these silos and fostering collaboration is essential for effective data integration.

Strategies For Mitigation

Fortunately, practical solutions exist for each of these challenges. To address inconsistent data formats, establish clear data standards and implement data transformation processes. These processes convert data into a consistent format, much like translating different languages into a common one.
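As a sketch of such a transformation, the code below maps records from two hypothetical sources, a flat CSV export from a CRM and nested JSON from a web app, onto one agreed contact shape. The field names and the target shape are assumptions standing in for your own data standard.

```python
import csv
import io
import json

# Two hypothetical sources describing contacts in different formats.
CRM_CSV = "contact_id,full_name,phone\n101,Dana Lee,555-0100\n"
WEB_JSON = '[{"id": "w-9", "name": {"first": "Sam", "last": "Ortiz"}, "phone": null}]'

def from_crm(text: str) -> list[dict]:
    """Map flat CSV rows onto the common contact shape."""
    return [
        {"source": "crm", "source_id": row["contact_id"],
         "name": row["full_name"], "phone": row["phone"] or None}
        for row in csv.DictReader(io.StringIO(text))
    ]

def from_web(text: str) -> list[dict]:
    """Map nested JSON objects onto the same shape."""
    return [
        {"source": "web", "source_id": obj["id"],
         "name": f'{obj["name"]["first"]} {obj["name"]["last"]}', "phone": obj.get("phone")}
        for obj in json.loads(text)
    ]

contacts = from_crm(CRM_CSV) + from_web(WEB_JSON)
for contact in contacts:
    print(contact)
```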

Schema evolution can be managed using version control for your data schemas and implementing flexible integration tools that adapt to changes. This allows for modifications without breaking connections between integrated systems.
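One common way to put schemas under version control is to tag each record with a schema version and upgrade older records step by step through small migration functions. The schemas below are hypothetical:

- Version 1 stores a single `name`.
- Version 2 splits it into first and last name.
- Version 3 adds a `country` field with a default.

```python
# Hypothetical schemas: v1 has "name"; v2 splits it into first/last; v3 adds "country".

def v1_to_v2(rec: dict) -> dict:
    rec = dict(rec)  # work on a copy so the caller's record is untouched
    first, _, last = rec.pop("name").partition(" ")
    return {**rec, "first_name": first, "last_name": last, "schema_version": 2}

def v2_to_v3(rec: dict) -> dict:
    return {**rec, "country": rec.get("country", "unknown"), "schema_version": 3}

MIGRATIONS = {1: v1_to_v2, 2: v2_to_v3}
CURRENT_VERSION = 3

def upgrade(rec: dict) -> dict:
    """Apply migrations in order until the record reaches CURRENT_VERSION."""
    version = rec.get("schema_version", 1)  # records written before versioning count as v1
    while version < CURRENT_VERSION:
        rec = MIGRATIONS[version](rec)
        version = rec["schema_version"]
    return rec

print(upgrade({"id": 1, "name": "Ada Lovelace"}))
print(upgrade({"id": 2, "first_name": "Alan", "last_name": "Turing", "schema_version": 2}))
```

Because each migration only handles one version step, adding version 4 later means writing one new function rather than touching every consumer.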

Improving poor-quality source data requires implementing data quality rules and validation processes. This proactive approach identifies and corrects errors at the source, ensuring the integrity of the integrated data. Tools like OpenRefine can assist with this.
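Validation rules can be expressed as small, named checks that run on every incoming record, so failures can be reported back to the source owners. The rules and field names below are illustrative assumptions. An interactive tool like OpenRefine suits one-off cleanup, while checks like these suit automated pipelines.

```python
import re
from typing import Callable

# Each rule pairs a readable description with a check; both are illustrative.
Rule = tuple[str, Callable[[dict], bool]]

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

RULES: list[Rule] = [
    ("customer_id is present", lambda r: bool(r.get("customer_id"))),
    ("email looks valid", lambda r: bool(EMAIL_RE.match(r.get("email") or ""))),
    ("age is between 0 and 120", lambda r: isinstance(r.get("age"), int) and 0 <= r["age"] <= 120),
]

def validate(record: dict) -> list[str]:
    """Return the description of every rule the record fails (an empty list means clean)."""
    return [desc for desc, check in RULES if not check(record)]

good = {"customer_id": "C1", "email": "ana@example.com", "age": 34}
bad = {"customer_id": "", "email": "not-an-email", "age": 150}
print(validate(good))  # []
print(validate(bad))
```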

Breaking down organizational silos requires clear communication and collaboration between departments. Establishing a data governance framework can also help ensure everyone is on the same page regarding data management and integration.

Scaling Challenges and Solutions

As data volumes grow and integration points multiply, scalability becomes a major concern. Traditional integration methods may struggle to keep up with increasing demands, much like a single-lane road becoming congested with increasing traffic.

To address scaling challenges, consider adopting cloud-native integration platforms. These platforms offer greater flexibility and scalability, handling large data volumes and adapting to changing needs more easily than traditional systems.

Additionally, implementing automation can help streamline the data integration process and reduce manual effort. This frees up valuable resources and allows your team to focus on more strategic tasks.

By understanding these common obstacles and implementing appropriate mitigation strategies, organizations can navigate the complexities of data integration and unlock the full potential of their data. This sets the stage for a robust and effective integration strategy that delivers real business value.

Building an Integration Strategy That Actually Works

A successful data integration strategy goes beyond simply following best practices. It requires a focused alignment of the data integration process with specific business goals. This means prioritizing tangible business outcomes over purely technical considerations. Ultimately, this ensures the integrated data directly contributes to achieving strategic objectives.

Aligning Integration With Business Objectives

Many organizations make the mistake of prioritizing technical complexity over business value. However, a truly effective integration strategy begins with clearly defined business objectives. For instance, a retailer aiming to improve customer personalization might integrate data from various customer touchpoints. This clear objective then guides the entire integration process, ensuring relevance and impact.

This business-centric approach ensures that the integrated data provides actionable insights. These insights can then be used to enhance decision-making, streamline operations, and ultimately drive revenue growth. This direct link between data integration and business goals is crucial for maximizing ROI.

Establishing Effective Data Governance

Data governance is often viewed as a restrictive process, but it should be seen as an enabling function. A well-defined governance framework provides the necessary structure and guidelines for managing data throughout the integration process. This includes clearly defined roles and responsibilities, established data quality standards, and ensuring compliance with relevant regulations.

Effective data governance ensures data consistency and reliability, essential for accurate analysis and informed decision-making. It also promotes transparency and accountability, building trust in the integrated data. For a deeper dive into common integration challenges and how to overcome them, check out these tips on system integration challenges.

Designing a Scalable Architecture

A successful integration architecture must be built to accommodate future growth. As data volumes expand and new data sources emerge, the architecture should scale seamlessly. This requires careful planning and the selection of appropriate technologies.

This forward-thinking approach ensures the long-term effectiveness of the integration strategy. It also prevents costly and time-consuming rework down the line as the business evolves. Scalability is essential for maintaining performance and avoiding bottlenecks as data demands increase.

Structuring Integration Capabilities and Change Management

Building a sustainable integration capability requires a skilled team. This team should include data architects, integration specialists, and data analysts. These professionals possess the expertise to design, implement, and manage the integration process effectively.

Effective change management is also crucial for successful adoption. This involves communicating the benefits of the integrated system to stakeholders and providing appropriate training. This approach ensures buy-in and minimizes disruption during the transition. A well-structured team combined with effective change management creates the foundation for a successful and sustainable integration strategy. By focusing on business objectives, establishing strong governance, designing for scalability, and fostering a culture of change, organizations can develop data integration strategies that deliver real business value.

Industry-Specific Integration Challenges and Solutions

Data integration isn't a one-size-fits-all solution. What works for one industry might not work for another. Different sectors face unique challenges regarding regulations, data sources, and necessary integration patterns. Let's explore these industry-specific nuances and practical solutions.

Healthcare

The healthcare industry operates under strict regulations, particularly HIPAA, which governs patient data privacy and security. Any data integration process must prioritize these concerns. Healthcare data sources are diverse, from electronic health records (EHRs) to medical billing systems. Integrating this data requires specialized tools and expertise. The goal is a unified view of patient information, improving care coordination and outcomes. Imagine easily accessing a patient's complete medical history, lab results, and insurance information all in one place.
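One technique often used when combining patient data from several systems is pseudonymization: replacing direct identifiers with tokens before the data is integrated. The sketch below uses a keyed hash (HMAC-SHA256 from Python's standard library). The same patient therefore gets the same token in every source, and records can still be joined. The key handling and field names are assumptions. Pseudonymization is one safeguard, not a complete HIPAA compliance measure.

```python
import hashlib
import hmac

# Assumption: in a real system the key comes from a secrets manager, not source code.
PSEUDONYM_KEY = b"replace-with-a-secret-key-from-a-vault"

def pseudonymize(patient_id: str) -> str:
    """Deterministic keyed hash: identical IDs map to identical tokens, and without
    the key an outsider cannot compute tokens from guessed IDs."""
    return hmac.new(PSEUDONYM_KEY, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()

# Hypothetical rows from an EHR and a billing system.
ehr_row = {"patient_id": "MRN-001234", "diagnosis_code": "E11.9"}
billing_row = {"patient_id": "MRN-001234", "claim_amount": 180.0}

for row in (ehr_row, billing_row):
    row["patient_id"] = pseudonymize(row["patient_id"])

print(ehr_row["patient_id"] == billing_row["patient_id"])  # True
```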

Financial Services

Financial institutions handle highly sensitive data under strict regulatory oversight. Real-time integration is critical for fraud detection and transaction processing, but it requires robust security. Data sources include transaction databases, customer relationship management (CRM) systems, and market data feeds. Effective integration enables faster decision-making, improves risk management, and enhances customer service. For instance, integrating transaction data with CRM systems provides a comprehensive view of customer activity for personalized advice and offers.

Manufacturing

Data integration is crucial for optimizing supply chains and improving production efficiency in manufacturing. Data sources range from inventory management systems to sensor data from factory equipment. Integrating this data helps identify bottlenecks, predict maintenance needs, and enhance product quality. Consider a car manufacturer integrating data from parts suppliers with its production schedule, enabling just-in-time delivery, minimizing inventory costs, and maximizing production efficiency.
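Spotting readings that break from the norm is a common building block for the quality and maintenance goals above. The sketch below flags outliers in hypothetical hourly reject counts from one production line. It uses the modified z-score, which is based on the median and the median absolute deviation (MAD). The 3.5 cutoff is a widely used rule of thumb, not a universal setting.

```python
from statistics import median

def flag_anomalies(values: list[float], threshold: float = 3.5) -> list[int]:
    """Return indexes of values whose modified z-score exceeds the threshold.

    The median and MAD are used instead of the mean and standard deviation because
    a single extreme value barely moves them.
    """
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no spread to measure against
    return [i for i, v in enumerate(values) if 0.6745 * abs(v - med) / mad > threshold]

hourly_rejects = [4, 5, 3, 6, 4, 5, 31, 4]
print(flag_anomalies(hourly_rejects))  # [6]
```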

Retail

Retailers need a unified customer view across all channels, from online stores to physical locations. This means integrating data from point-of-sale (POS) systems, e-commerce platforms, and customer loyalty programs. Effective integration enables personalized marketing, improves customer service, and optimizes inventory management. A clothing retailer could integrate online store data with its in-store POS system, offering personalized recommendations based on browsing history and purchase behavior.
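A minimal version of that unified customer view merges records from each channel on a shared key. The sketch below assumes email address is that key and normalizes it before matching. Real identity resolution often relies on loyalty IDs or fuzzier matching, and the sample records are made up.

```python
from collections import defaultdict

# Hypothetical records from two channels; email is assumed to be the shared key.
pos_sales = [
    {"email": "Jo@Example.com ", "store": "Downtown", "amount": 40.0},
    {"email": "max@example.com", "store": "Airport", "amount": 15.0},
]
online_orders = [
    {"email": "jo@example.com", "viewed": ["rain jacket"], "amount": 60.0},
]

def normalize_email(email: str) -> str:
    return email.strip().lower()

def unify(pos: list[dict], online: list[dict]) -> dict[str, dict]:
    """Build one profile per customer with spend and activity from both channels."""
    profiles: dict[str, dict] = defaultdict(
        lambda: {"in_store_spend": 0.0, "online_spend": 0.0, "stores": set(), "viewed": []}
    )
    for sale in pos:
        profile = profiles[normalize_email(sale["email"])]
        profile["in_store_spend"] += sale["amount"]
        profile["stores"].add(sale["store"])
    for order in online:
        profile = profiles[normalize_email(order["email"])]
        profile["online_spend"] += order["amount"]
        profile["viewed"].extend(order["viewed"])
    return dict(profiles)

customers = unify(pos_sales, online_orders)
print(customers["jo@example.com"])
```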

The data integration market is constantly evolving, with North America holding the largest market share. However, the Asia Pacific region is expected to see the highest growth due to rapid technological advancement. You can explore this further: Data Integration Market Report

By understanding industry-specific challenges and solutions, businesses can develop effective data integration strategies. Working with an automation consultancy can be invaluable in navigating these complexities and creating custom solutions. They can offer expertise in designing and implementing integration workflows tailored to your specific business needs and regulatory requirements.

The Future of Data Integration: Beyond the Buzzwords

The data integration process is constantly changing. New technologies are emerging, promising to reshape how we approach integration. We're moving beyond simple data transfers and toward intelligent, automated systems. This section explores some key trends and their practical uses, not just their theoretical potential.

The Rise of AI and Machine Learning in Data Integration

Artificial intelligence (AI) and machine learning (ML) are making real impacts in data integration, moving beyond the hype. These technologies offer practical automation in several key areas.

Data Mapping: AI can automate the often tedious process of mapping data fields between different systems. This reduces manual work and speeds up integration projects. Imagine software automatically identifying matching fields between databases, even if their naming conventions differ.

Anomaly Detection: ML algorithms can find unusual data patterns and potential errors during integration. This leads to higher data quality and prevents costly mistakes. Picture a system flagging a sudden sales spike in a specific region, allowing you to investigate before it affects your business.

Workflow Optimization: AI can optimize integration workflows, automatically adjusting resources and processes for better efficiency. This means faster integration and lower operating costs.
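The data-mapping idea can be illustrated without any AI at all. The sketch below is a simpler stand-in: it normalizes field names, scores them with plain string similarity from Python's `difflib`, and suggests the best match above a threshold for a person to review. The field names and the 0.6 threshold are assumptions. An ML-based mapper might also look at data values and past mappings.

```python
import re
from difflib import SequenceMatcher

def normalize_field(name: str) -> str:
    """Lowercase and drop separators so 'cust_id' and 'CustomerID' compare fairly."""
    return re.sub(r"[^a-z0-9]", "", name.lower())

def suggest_mapping(source_fields: list[str], target_fields: list[str],
                    threshold: float = 0.6) -> dict:
    """For each source field, suggest the most similar target field, or None if nothing is close."""
    mapping = {}
    for src in source_fields:
        scored = [
            (SequenceMatcher(None, normalize_field(src), normalize_field(tgt)).ratio(), tgt)
            for tgt in target_fields
        ]
        best_score, best_target = max(scored)
        mapping[src] = best_target if best_score >= threshold else None
    return mapping

print(suggest_mapping(["cust_id", "cust_email", "signup_dt"],
                      ["CustomerID", "CustomerEmail", "SignupDate"]))
```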

Real-Time and Streaming Integration for Immediate Insights

Real-time and streaming integration are gaining traction, offering immediate business insights. This lets organizations quickly respond to changing market dynamics.

Real-time integration delivers data as it's created, enabling instant reactions.

Streaming integration handles continuous data streams from multiple sources, providing a constant flow of up-to-date information.

These methods are especially valuable in sectors like finance and e-commerce, where timely data is crucial for success.

Blockchain for Enhanced Data Provenance

Blockchain technology, known for its secure and transparent nature, is finding a place in data integration to ensure data provenance. This involves tracking the origin and movement of data throughout the integration process. The increased transparency builds trust and simplifies regulatory compliance.
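The property blockchains rely on for provenance can be shown with a simple hash chain. Each log entry stores a hash covering its own content and the previous entry's hash, so editing any earlier entry is detectable. This sketch is not a blockchain (there is no distributed ledger or consensus), and the event fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(event: dict, prev_hash: str) -> str:
    payload = json.dumps({"event": event, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def add_entry(log: list[dict], event: dict) -> None:
    """Append a provenance event whose hash covers the event and the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev_hash": prev_hash, "hash": _digest(event, prev_hash)})

def verify(log: list[dict]) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True

log: list[dict] = []
add_entry(log, {"step": "extract", "source": "crm", "rows": 1200})
add_entry(log, {"step": "transform", "rule": "normalize_dates"})
add_entry(log, {"step": "load", "target": "warehouse.customers"})
print(verify(log))  # True
log[1]["event"]["rule"] = "something_else"  # tamper with history
print(verify(log))  # False
```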

Edge Computing: Reshaping Distributed Integration Architectures

Edge computing, which processes data closer to the source, is changing distributed integration architectures. This approach reduces latency and improves efficiency, particularly for real-time data applications. It also increases resilience by reducing reliance on a central server.
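A typical edge pattern is to reduce raw readings to a compact summary on or near the device, make any urgent decision locally, and send only the summary upstream. The sketch below assumes a hypothetical vibration sensor and alert threshold.

```python
from statistics import mean

def summarize_window(device_id: str, readings: list[float], alert_above: float) -> dict:
    """Reduce a window of raw sensor readings to one compact summary at the edge.

    Only this summary, including the locally decided alert flag, would be sent upstream.
    """
    return {
        "device_id": device_id,
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
        "alert": max(readings) > alert_above,
    }

# Hypothetical: 60 vibration readings collected over one minute, ending with a spike.
readings = [0.42 + 0.01 * (i % 5) for i in range(59)] + [1.9]
print(summarize_window("press-07", readings, alert_above=1.5))
```

Sixty readings become one small record, which cuts network traffic and lets the alert fire without a round trip to a central server.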

Preparing Your Integration Strategy for the Future

To get ready for these advancements, businesses should consider these steps:

Embrace Continuous Learning: Stay up-to-date on data integration trends.

Invest in Scalable Platforms: Flexible and scalable integration platforms help adapt to new technologies and increasing data volumes.

Develop AI/ML Expertise: AI and ML will play an increasingly important role in data integration.

Prioritize Data Governance: Ensure data quality and security as integration complexity increases.

By understanding and preparing for these trends, organizations can fully utilize the power of data integration in the future. Don't fall behind; start preparing your strategy today.

Ready to optimize your data integration and unlock your data's full potential? Contact Flow Genius today for a consultation: https://www.flowgenius.ai
