Sep 3, 2025

Data migration is a critical, high-stakes initiative that can either propel a business forward or halt it in its tracks. With data volumes exploding and systems becoming more complex, a simple lift-and-shift approach is no longer viable. The difference between a seamless transition and a costly failure lies not just in a plan, but in a detailed blueprint grounded in proven data migration best practices. A solid blueprint ensures every step, from initial assessment to final cutover, is meticulously managed, automated where possible, and validated for accuracy.

This guide moves beyond generic advice to provide that comprehensive blueprint. We will cover the entire migration lifecycle, focusing on actionable strategies for deep-dive data assessment, phased execution with pilot testing, and robust post-migration optimization. For infrastructure project managers, technology teams, or any business leader looking to automate complex CRM workflows using tools like Zapier or Make.com, understanding these principles is the first step toward achieving operational excellence. This article outlines eight essential practices designed to minimize risk, ensure data integrity, and maximize the return on your technology investment.

Whether you are moving data to a new on-premises system or into a cloud environment, these foundational steps are universal. If your target is the cloud, pair this blueprint with a dedicated set of cloud migration best practices. Let's start building your blueprint for a flawless data migration.

1. Comprehensive Data Assessment and Profiling

One of the most critical, yet often underestimated, data migration best practices is conducting a thorough assessment and profiling of your source data before a single byte is moved. This foundational step involves a deep-dive analysis into the current state of your data to understand its structure, quality, volume, and interdependencies. Neglecting this phase is like building a house without surveying the land first; you are almost certain to encounter costly and time-consuming problems down the line.

Comprehensive data assessment goes beyond a simple inventory. It requires you to meticulously evaluate the data for accuracy, completeness, and consistency. By identifying anomalies, duplicates, and outdated information early, you can develop a targeted cleansing strategy. This proactive approach ensures that you migrate only clean, relevant, and valuable data to the new system, preventing the "garbage in, garbage out" phenomenon that plagues many migration projects.

Why Data Profiling is Non-Negotiable

The core goal is to create a detailed blueprint of your data landscape. This informs every subsequent decision, from selecting the right migration tools and strategy (like a big bang or phased approach) to accurately forecasting timelines and resource allocation. Companies like Spotify, during their migration to Google Cloud Platform, invested heavily in analyzing music metadata and user preference data. This initial profiling enabled them to design a target architecture that could handle the complexity and scale of their datasets, ensuring a smooth transition with minimal disruption to user experience.

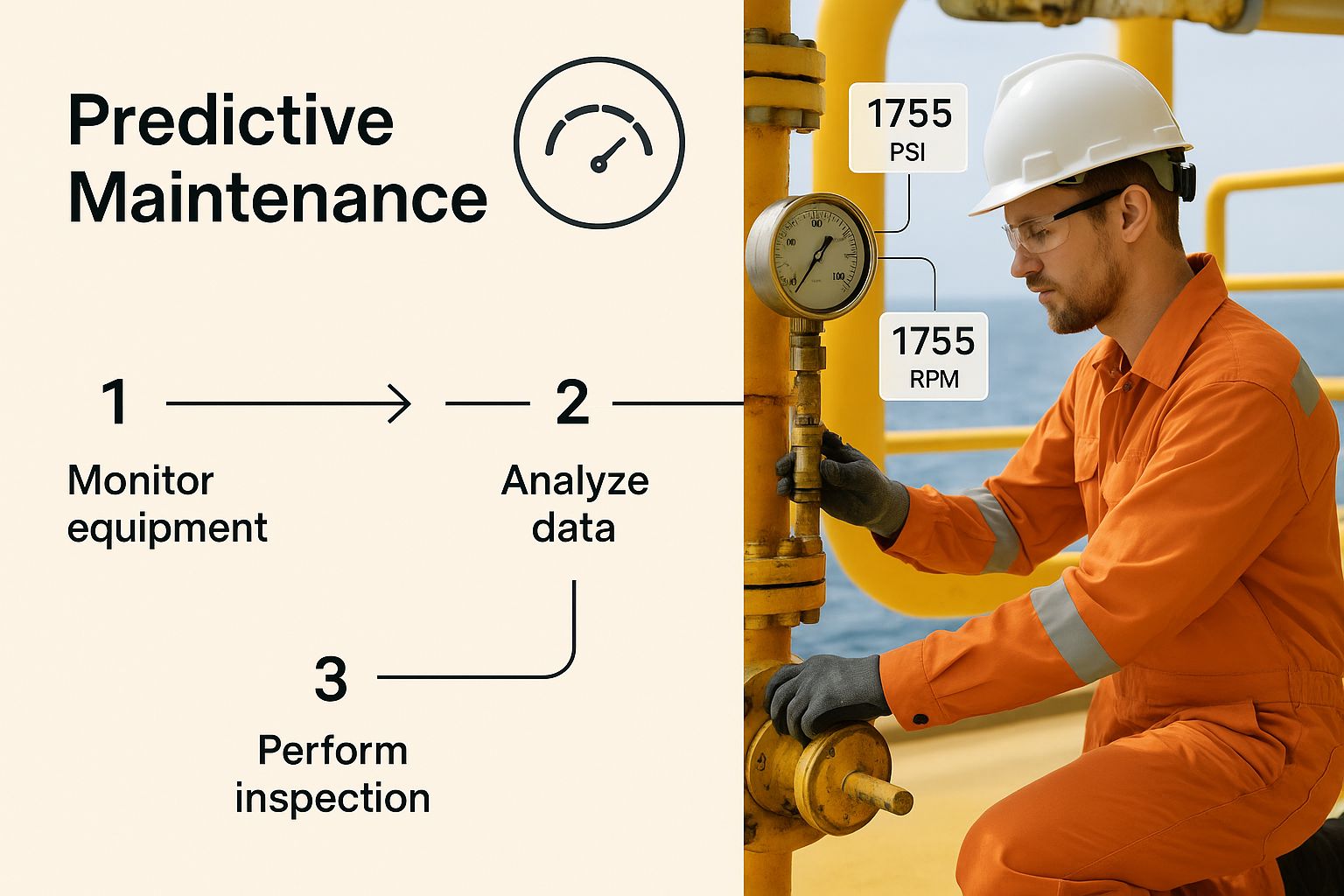

The following infographic illustrates the core, sequential workflow of a proper data assessment.

This process flow highlights how each step logically builds upon the last, moving from granular quality checks to a holistic view of data relationships.

Actionable Tips for Implementation

To execute a successful data assessment, consider these practical steps:

Utilize Automation: Leverage automated data profiling tools like Talend or Informatica Data Quality. These tools can rapidly scan vast datasets to identify patterns, inconsistencies, and null values, saving countless hours of manual effort.

Document Everything: Create a comprehensive data dictionary and document all findings. This documentation becomes an invaluable asset for the migration team and for future compliance and governance efforts. Effective data handling is part of a larger strategy; for more on this, see our guide to the 9 best practices for data management in 2025.

Involve Business Stakeholders: Data is a business asset. Involve department heads and subject matter experts in the validation process. They can provide crucial context on data definitions, business rules, and quality thresholds that technical teams might overlook.
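
Dedicated profiling tools do this at scale, but the core checks are simple enough to sketch. The Python example below assumes the source table has already been exported as a list of dictionaries (one per row) with scalar, hashable values; the function name `profile_records`, the null convention, and the sample rows are hypothetical. It reports the null rate and distinct-value count per column, plus the number of exact duplicate rows.

```python
from collections import Counter
from typing import Any


def _is_null(value: Any) -> bool:
    # Treat None and blank strings as missing; adjust to your own null conventions.
    return value is None or (isinstance(value, str) and not value.strip())


def profile_records(rows: list[dict[str, Any]]) -> dict[str, Any]:
    """Return simple quality metrics for rows exported as dictionaries."""
    total = len(rows)
    columns = sorted({col for row in rows for col in row})
    report: dict[str, Any] = {"row_count": total, "columns": {}}

    for col in columns:
        values = [row.get(col) for row in rows]  # a missing key counts as null
        present = [v for v in values if not _is_null(v)]
        report["columns"][col] = {
            "null_rate": (total - len(present)) / total if total else 0.0,
            "distinct_values": len(set(present)),
        }

    # Exact duplicates: identical (column, value) pairs across the whole row.
    fingerprints = Counter(tuple(sorted(row.items())) for row in rows)
    report["duplicate_rows"] = sum(n - 1 for n in fingerprints.values() if n > 1)
    return report


if __name__ == "__main__":
    sample = [
        {"id": 1, "email": "a@example.com", "country": "US"},
        {"id": 2, "email": "", "country": "US"},
        {"id": 2, "email": "", "country": "US"},
        {"id": 3, "email": None, "country": "DE"},
    ]
    print(profile_records(sample))
```

For the sample rows, `email` has a null rate of 0.75 and one duplicate row is reported: exactly the kind of finding that should feed the cleansing strategy and the data dictionary.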

2. Phased Migration Strategy with Pilot Testing

Instead of attempting a high-risk, all-at-once "big bang" migration, a more prudent approach is to adopt a phased strategy that includes pilot testing. This methodical best practice involves breaking down the complex migration project into smaller, more manageable stages. Each stage is executed and validated sequentially, allowing teams to learn, adapt, and mitigate risks before moving on to more critical datasets.

This iterative process systematically reduces uncertainty and builds momentum. By starting with a low-risk, representative dataset for a pilot test, you create a safe environment to refine your migration processes, tools, and rollback procedures. This ensures that by the time you migrate mission-critical systems, your team has a battle-tested and reliable playbook, minimizing the potential for costly downtime or data loss.

Why an Iterative Approach is a Game-Changer

The core objective of a phased migration is to transform a massive, daunting project into a series of predictable, controlled steps. This approach provides invaluable opportunities for course correction. For instance, during its extensive migration to Azure, Microsoft moved its internal services in carefully planned waves. This allowed them to identify and resolve unforeseen technical challenges on smaller internal systems before applying those lessons to global, customer-facing platforms like Office 365, ensuring a stable transition.

Similarly, Amazon Web Services (AWS) champions this method in its cloud migration frameworks. It advocates starting with pilot applications to demonstrate value and prove out the technology and processes. This creates a feedback loop that continuously improves the migration strategy for subsequent, more complex workloads, which is why pilot testing is a cornerstone of modern data migration best practices.

Actionable Tips for Implementation

To successfully execute a phased migration with pilot testing, consider these practical steps:

Select a Representative Pilot: Choose a dataset or application for your initial pilot that is complex enough to be a meaningful test but not so critical that a failure would disrupt core business operations.

Define Success Criteria: For each phase, establish clear, measurable Key Performance Indicators (KPIs) for success. This could include data accuracy rates, system performance benchmarks, and user acceptance testing scores.

Document and Debrief: After each phase, conduct a thorough post-mortem to document what worked, what didn't, and why. This "lessons learned" documentation is crucial for refining the process for subsequent phases.

Test Your Rollback Plan: A key advantage of a phased approach is the ability to test your contingency plans. Ensure that for each phase, you have a fully tested and verified procedure to roll back to the source system if necessary.
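
Success criteria are easiest to enforce when they are written down as data and checked mechanically at the end of each phase. The sketch below assumes each phase reports its results as a dictionary of metrics; the `Criterion` type, the metric names, and the thresholds are hypothetical illustrations, not recommended values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    metric: str
    threshold: float
    higher_is_better: bool = True


def evaluate_phase(metrics: dict[str, float], criteria: list[Criterion]) -> list[str]:
    """Return human-readable failures; an empty list means the phase passes."""
    failures = []
    for c in criteria:
        value = metrics.get(c.metric)
        if value is None:
            failures.append(f"{c.metric}: not reported")
        elif c.higher_is_better and value < c.threshold:
            failures.append(f"{c.metric}: {value} < {c.threshold}")
        elif not c.higher_is_better and value > c.threshold:
            failures.append(f"{c.metric}: {value} > {c.threshold}")
    return failures


# Hypothetical KPIs agreed for the pilot phase.
PILOT_CRITERIA = [
    Criterion("record_match_rate", 0.999),
    Criterion("p95_query_latency_ms", 250, higher_is_better=False),
    Criterion("uat_pass_rate", 0.95),
]

if __name__ == "__main__":
    pilot_metrics = {"record_match_rate": 0.9995, "p95_query_latency_ms": 310, "uat_pass_rate": 0.97}
    problems = evaluate_phase(pilot_metrics, PILOT_CRITERIA)
    if problems:
        print("Pilot failed; follow the rollback plan:", problems)
    else:
        print("Pilot passed; proceed to the next wave.")
```

Treating a missing metric as a failure is a deliberate choice: a phase should not pass simply because nobody measured something.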

3. Robust Data Validation and Testing Framework

Migrating data without a rigorous validation and testing framework is akin to sailing a ship in a storm without navigation tools. One of the most critical data migration best practices involves establishing a comprehensive methodology to verify data integrity, accuracy, and completeness at every stage. This framework ensures that the data in the target system is a precise, reliable reflection of the source, preventing data corruption, loss, and costly post-migration fixes.

This process moves beyond simple record counts. It requires a multi-layered testing strategy that includes everything from unit testing individual data fields and transformations to full-scale user acceptance testing (UAT). By implementing automated scripts and reconciliation processes, you create a safety net that catches errors before they can impact business operations, ensuring the new system performs as expected with trustworthy data.
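
At the unit-testing layer, each transformation rule can be checked in isolation before any data moves. A minimal sketch, assuming a hypothetical rule that converts legacy `MM/DD/YYYY` strings into ISO 8601 dates; the tests are written as plain functions so a runner such as pytest can collect them without extra dependencies.

```python
from datetime import datetime
from typing import Optional


def to_iso_date(legacy: Optional[str]) -> Optional[str]:
    """Convert a legacy 'MM/DD/YYYY' string to ISO 8601 ('YYYY-MM-DD').

    Blank or missing values become None. Anything else that does not parse
    raises ValueError, so bad data fails loudly instead of migrating silently.
    """
    if legacy is None or not legacy.strip():
        return None
    return datetime.strptime(legacy.strip(), "%m/%d/%Y").date().isoformat()


# Plain test functions: pytest collects these, or they can be called directly.
def test_valid_date() -> None:
    assert to_iso_date("03/09/2025") == "2025-03-09"


def test_blank_values_become_none() -> None:
    assert to_iso_date(None) is None
    assert to_iso_date("   ") is None


def test_impossible_date_is_rejected() -> None:
    try:
        to_iso_date("13/45/2025")
    except ValueError:
        return
    raise AssertionError("expected ValueError for month 13")
```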

Why a Testing Framework is Non-Negotiable

The ultimate goal of a data validation framework is to build confidence and mitigate risk. It provides quantifiable proof that the migration has met all business and technical requirements. For high-stakes environments, this is indispensable. For instance, when JPMorgan Chase executed its cloud migration, it relied on a powerful testing framework to validate over $6 trillion in daily transactions, ensuring zero data loss or corruption. Similarly, Deutsche Bank's regulatory data migrations required extensive testing to meet strict compliance mandates, where even minor discrepancies could lead to significant penalties.

This structured approach transforms testing from a final-stage checkbox exercise into an integrated, continuous quality assurance process. It is the most reliable way to confirm that critical business rules, data relationships, and complex transformations have been executed correctly, thereby protecting the integrity of your most valuable asset.

Actionable Tips for Implementation

To build and execute an effective data validation and testing framework, consider these practical steps:

Automate Extensively: Use specialized data testing tools like QuerySurge or Datagaps' ETLValidator to automate validation scripts. Automation ensures consistent, repeatable, and scalable testing, which is impractical to achieve manually with large datasets.

Establish Clear Pass/Fail Criteria: Before testing begins, define and agree upon precise metrics for what constitutes a successful test. This includes acceptable thresholds for data discrepancies, performance benchmarks, and business rule validation.

Involve Business Users: Engage subject matter experts and business stakeholders in user acceptance testing (UAT). They are uniquely qualified to validate critical business logic and confirm that the migrated data supports their operational workflows and reporting needs.

Document All Test Results: Maintain a detailed log of all test cases, scripts, results, and issues. This documentation is crucial for audit trails, troubleshooting, and demonstrating compliance to stakeholders and regulatory bodies.
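
Record counts alone miss rows that arrived but were altered along the way. A common complement is to hash every row on both sides and compare the hashes by primary key. The sketch below assumes both extracts fit in memory as lists of dictionaries with a unique key column (here called `id`); `row_digest`, `reconcile`, and the `ignore` parameter (for target-only audit columns) are hypothetical names. For large tables, the same idea is usually pushed down into SQL or run per partition.

```python
import hashlib
import json
from typing import Any


def row_digest(row: dict[str, Any], ignore: frozenset[str] = frozenset()) -> str:
    """SHA-256 of a row's canonical JSON form; key order does not affect the hash."""
    payload = {k: v for k, v in row.items() if k not in ignore}
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"), default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def reconcile(source: list[dict[str, Any]], target: list[dict[str, Any]],
              key: str = "id", ignore: frozenset[str] = frozenset()) -> dict[str, list]:
    """Compare two extracts by primary key and per-row hash."""
    src = {row[key]: row_digest(row, ignore) for row in source}
    tgt = {row[key]: row_digest(row, ignore) for row in target}
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }
```

Because values are serialized as-is, a silent type change such as `1` becoming `1.0` shows up as a mismatch. That is usually what you want to catch; if a type change is intended, normalize it explicitly before hashing.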

4. Comprehensive Backup and Recovery Planning

A critical data migration best practice that serves as an indispensable safety net is establishing a robust backup and recovery plan. This strategy involves more than simply creating a copy of the source data; it is a comprehensive approach to protecting data integrity and ensuring business continuity throughout the entire migration lifecycle. A well-designed plan provides a clear, tested mechanism to restore data or roll back the entire project in the event of failure, corruption, or unforeseen errors.

Executing a migration without this safety net is a high-stakes gamble. The plan must encompass point-in-time backups, clearly documented recovery procedures, and validated rollback mechanisms. By preparing for potential setbacks, you empower your team to act decisively and minimize downtime. This proactive approach transforms a potential disaster into a manageable incident, safeguarding your most valuable asset: your data.

Why a Recovery Plan is Your Ultimate Insurance Policy

The core goal of this practice is to ensure that no matter what goes wrong during the transition, you have a reliable path back to a stable, known-good state. This plan builds confidence among stakeholders and provides the technical team with a clear course of action under pressure. During its complex core banking system migration, the Commonwealth Bank of Australia implemented multiple tiers of backup and rigorously tested recovery scenarios. This preparation was crucial in ensuring a seamless transition for millions of customers, as it guaranteed the bank could revert to the previous system instantly if any critical issues arose.

This planning prevents irreversible data loss and catastrophic project failure, making it a non-negotiable component of any serious migration effort.

Actionable Tips for Implementation

To build a resilient backup and recovery strategy, consider these practical steps:

Test Recovery Procedures Relentlessly: Before starting the migration, conduct full-scale tests of your recovery procedures. Simulate various failure scenarios to validate that you can restore data accurately and within the required timeframes. A plan that hasn't been tested is merely a theory.

Maintain Geographically Redundant Backups: Store multiple copies of your backups in different physical or cloud locations. This strategy protects against site-specific disasters and ensures data availability even if your primary data center goes offline.

Document Every Step: Create detailed, step-by-step documentation for all recovery and rollback procedures. This guide should be clear enough for any member of the technical team to follow during a high-stress situation, minimizing human error. Leading providers like Veeam and Commvault offer solutions that help automate and document these critical workflows.

Establish Clear Backup Retention Policies: Define how long each backup will be stored based on business needs and regulatory compliance. This ensures you have access to necessary historical data without incurring excessive storage costs.
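
A retention policy is easier to audit when it is expressed as code rather than prose. One widely used pattern is grandfather-father-son rotation; the sketch below is a simplified two-tier version that keeps every backup from the last `daily_days` days plus the newest backup in each ISO week of the last `weekly_weeks` weeks (a monthly tier would follow the same pattern). The function name and default windows are assumptions for illustration; your real windows come from business and regulatory requirements.

```python
from datetime import datetime, timedelta


def backups_to_keep(timestamps: list[datetime], now: datetime,
                    daily_days: int = 7, weekly_weeks: int = 4) -> set[datetime]:
    """Return the subset of backup timestamps that the policy says to retain."""
    daily_cutoff = now - timedelta(days=daily_days)
    weekly_cutoff = now - timedelta(weeks=weekly_weeks)

    keep: set[datetime] = set()
    newest_per_week: dict[tuple[int, int], datetime] = {}
    for ts in timestamps:
        # Daily tier: everything inside the short window.
        if ts >= daily_cutoff:
            keep.add(ts)
        # Weekly tier: newest backup per ISO (year, week) inside the longer window.
        if ts >= weekly_cutoff:
            iso_year, iso_week, _ = ts.isocalendar()
            current = newest_per_week.get((iso_year, iso_week))
            if current is None or ts > current:
                newest_per_week[(iso_year, iso_week)] = ts

    keep.update(newest_per_week.values())
    return keep
```

Anything outside the returned set is only a candidate for deletion; during an active migration, it is safer to delete nothing until the corresponding restore has been tested.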

5. Data Security and Compliance Management

Ensuring robust data security and maintaining regulatory compliance are not optional extras; they are foundational pillars of a successful data migration strategy. This practice involves embedding security protocols and compliance checks into every phase of the project, from initial planning to post-migration validation. It encompasses encryption of data in transit and at rest, strict access controls, and adherence to regulations like GDPR, HIPAA, or SOX. Overlooking this is a high-stakes gamble that can lead to severe data breaches, hefty fines, and irreparable brand damage.

A comprehensive approach to security and compliance management ensures that sensitive information is protected from unauthorized access or exposure throughout its journey. This proactive stance mitigates risks associated with moving data between different environments, whether on-premises or in the cloud. By integrating security measures directly into the migration workflow, you build a resilient process that safeguards your most valuable asset while meeting your legal and ethical obligations. This is a critical component of modern data migration best practices.

Why Security and Compliance are Non-Negotiable

The core goal is to treat data protection as an integral part of the migration, not an afterthought. This mindset informs tool selection, team training, and the overall migration architecture. For instance, when the Mayo Clinic migrated patient records to new cloud infrastructure, maintaining HIPAA compliance was a primary driver of the project's design. This required implementing end-to-end encryption and granular access controls to ensure that protected health information (PHI) remained secure at all times. Similarly, financial institutions like Goldman Sachs must ensure SOX compliance during systems migration to guarantee the integrity of financial reporting data, making audit trails and data lineage essential.

This focus ensures that the migration not only succeeds technically but also reinforces the organization's security posture and trustworthiness. It prevents the project from becoming a source of major organizational risk.

Actionable Tips for Implementation

To execute a secure and compliant data migration, consider these practical steps:

Involve Security Teams Early: Engage your security and compliance teams from the very beginning of the planning phase. Their expertise is crucial for identifying potential risks and defining security requirements for the target environment.

Classify and Encrypt Data: Before migration, implement a data classification scheme to identify sensitive information. Use strong encryption or tokenization for this data both in transit (using protocols like TLS) and at rest in the target system.

Maintain Detailed Audit Trails: Implement comprehensive logging and monitoring to create an immutable audit trail of all migration activities. This is non-negotiable for compliance and essential for forensic analysis if a security incident occurs.

Enforce the Principle of Least Privilege: Configure access controls so that team members and migration tools have only the minimum permissions necessary to perform their tasks. Regularly review and update these permissions throughout the project lifecycle.
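
Where sensitive fields must move but a target copy does not need the raw values (for example, a test or analytics environment), keyed hashing is one simple way to pseudonymize them consistently. The sketch below uses HMAC-SHA256 from Python's standard library; the `CLASSIFICATION` map and field names are hypothetical outputs of a classification exercise, and key management is deliberately out of scope. This is one-way, so it does not replace encryption for data that must remain recoverable, nor a vault-based tokenization service.

```python
import hashlib
import hmac
from typing import Any

# Hypothetical result of a data classification exercise.
CLASSIFICATION = {
    "customer_id": "internal",
    "email": "restricted",
    "ssn": "restricted",
    "country": "public",
}


def pseudonymize(value: Any, key: bytes) -> str:
    """Deterministic keyed hash: the same input and key always give the same token."""
    return hmac.new(key, str(value).encode("utf-8"), hashlib.sha256).hexdigest()


def protect_row(row: dict[str, Any], key: bytes) -> dict[str, Any]:
    protected = {}
    for field, value in row.items():
        # Unclassified fields default to 'restricted' so nothing slips through unreviewed.
        level = CLASSIFICATION.get(field, "restricted")
        if level == "restricted" and value is not None:
            protected[field] = pseudonymize(value, key)
        else:
            protected[field] = value
    return protected
```

Because the hash is deterministic, joins on a pseudonymized column still work in the target; the key itself should live in a secrets manager, never alongside the data.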

6. Performance Optimization and Monitoring

A crucial yet technically demanding data migration best practice is to systematically optimize and monitor performance throughout the entire project lifecycle. This involves more than just hitting the "start" button and hoping for the best; it's a proactive strategy to manage resources, tune transfer speeds, and prevent bottlenecks. Failing to focus on performance can turn a well-planned migration into a slow, disruptive, and costly ordeal that misses critical business deadlines.

This systematic approach involves establishing performance baselines, then using techniques like parallel processing and load balancing to maximize throughput. Continuous monitoring of network bandwidth, CPU usage, and I/O on both source and target systems is essential. This allows your team to identify and resolve performance degradation in real-time, ensuring the migration proceeds at an optimal pace without impacting ongoing business operations.
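
Baselines also make timelines forecastable. A back-of-the-envelope sketch, assuming throughput measured for a single worker during a pilot scales with extra workers at some fixed efficiency; both the model and the `efficiency` factor are simplifying assumptions, since network and target write capacity eventually saturate.

```python
def estimate_hours(total_gb: float, baseline_gb_per_hour: float,
                   workers: int = 1, efficiency: float = 0.8) -> float:
    """Rough wall-clock estimate for moving total_gb.

    baseline_gb_per_hour: single-worker throughput measured during a pilot.
    efficiency: assumed fraction of linear speed-up gained per extra worker;
    measure it on your own systems rather than trusting the default.
    """
    if workers < 1 or baseline_gb_per_hour <= 0:
        raise ValueError("workers must be >= 1 and throughput must be positive")
    effective_rate = baseline_gb_per_hour * (1 + (workers - 1) * efficiency)
    return total_gb / effective_rate


if __name__ == "__main__":
    print(f"{estimate_hours(2_000, 50):.1f} h with one worker")
    print(f"{estimate_hours(2_000, 50, workers=8):.1f} h with eight workers")
```

With these illustrative numbers, 2,000 GB at a measured 50 GB per hour takes about 40 hours on one worker and roughly 6 hours across eight workers at 80% efficiency. Live progress that falls well behind such an estimate is an early sign that a bottleneck has appeared.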

Why Continuous Tuning is Non-Negotiable

The core goal is to complete the migration as quickly and efficiently as possible while minimizing the impact on production systems and end-users. This informs the technical execution plan, helping to fine-tune resource allocation, schedule high-intensity tasks for off-peak hours, and validate the scalability of the target environment. For instance, during its migration to Azure, LinkedIn implemented extensive performance optimization, which resulted in a 50% reduction in total migration time. Similarly, eBay’s migration of its massive PayPal database leveraged parallel processing to move over 100TB with minimal downtime.

By treating performance as a key project metric, you transform the migration from a potential system strain into a highly controlled and efficient process. This ensures the project stays on schedule and within budget.

Actionable Tips for Implementation

To execute a high-performance data migration, consider these practical steps:

Establish Performance Baselines: Before the migration, measure the performance metrics of your source and target systems under normal load. This baseline is critical for identifying performance degradation during the migration process.

Leverage Parallel Processing: For large datasets, divide the data into smaller chunks and migrate them simultaneously using multiple threads or processes. This dramatically reduces the overall migration time compared to a single-threaded approach.

Monitor and Manage Resources: Use performance monitoring tools like Datadog or New Relic to track network bandwidth, CPU, and memory utilization in real time. This helps you quickly identify and address bottlenecks as they occur. Tracking the right metrics is key to success; for a broader view, see our list of the 7 key operational efficiency metrics to track in 2025.

Use Data Compression: Compressing data before transferring it over the network can significantly reduce transfer times, especially in environments with limited bandwidth. Ensure the target system can efficiently decompress the data upon arrival.
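
Parallel processing and compression combine naturally: split the extract into chunks, compress each chunk, and hand the chunks to a pool of workers. The sketch below uses `concurrent.futures` and `zlib` from Python's standard library and assumes rows are JSON-serializable; `send_chunk` is a hypothetical stand-in for whatever actually writes to the target (a bulk-load API, an object-storage upload, and so on), simulated here by decompressing and returning the rows.

```python
import json
import zlib
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Any, Iterator


def chunked(rows: list[dict[str, Any]], size: int) -> Iterator[list[dict[str, Any]]]:
    for start in range(0, len(rows), size):
        yield rows[start:start + size]


def send_chunk(index: int, payload: bytes) -> tuple[int, list[dict[str, Any]]]:
    """Hypothetical target writer; here it only decompresses to simulate a load."""
    rows = json.loads(zlib.decompress(payload).decode("utf-8"))
    return index, rows


def parallel_migrate(rows: list[dict[str, Any]], chunk_size: int = 1_000,
                     workers: int = 4) -> list[dict[str, Any]]:
    loaded: dict[int, list[dict[str, Any]]] = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [
            pool.submit(send_chunk, i, zlib.compress(json.dumps(chunk).encode("utf-8")))
            for i, chunk in enumerate(chunked(rows, chunk_size))
        ]
        for future in as_completed(futures):
            index, chunk_rows = future.result()  # re-raises any worker exception
            loaded[index] = chunk_rows
    # Reassemble in the original order so downstream checks can compare row by row.
    return [row for i in sorted(loaded) for row in loaded[i]]
```

Threads suit I/O-bound transfers like this one; if per-chunk transformation is CPU-heavy, `ProcessPoolExecutor` from the same module is the usual swap.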

7. Change Management and User Training

A technically flawless data migration can still fail if the people who rely on the new system are unprepared or resistant to change. This is why a structured approach to managing the human side of the transition is one of the most vital data migration best practices. Change management addresses stakeholder communication, user training, and organizational readiness, ensuring smooth adoption of the new systems and processes post-migration.

Neglecting this element means risking low user adoption, decreased productivity, and a poor return on investment. The migration project isn't complete when the data is moved; it's complete when users are effectively leveraging the new system to perform their jobs. A proactive change management plan transforms potential friction into enthusiastic adoption, securing the long-term success of the entire initiative.

Why the Human Element is Non-Negotiable

The core goal of change management is to guide your organization and its employees from the current state to the desired future state with minimal disruption. It involves building awareness, creating desire, and providing the knowledge and ability to adapt. Companies like Procter & Gamble, during their massive SAP migration, recognized this and implemented comprehensive training programs for over 140,000 employees worldwide. This focus on the human element ensured that the new system was not just implemented but embraced, leading to standardized processes and greater operational efficiency.

This structured approach, popularized by frameworks like Prosci's ADKAR model and Kotter's 8-Step Process for Leading Change, provides a roadmap for navigating the psychological and operational shifts inherent in a data migration.

Actionable Tips for Implementation

To effectively manage the human side of your migration, integrate these practical steps into your project plan:

Communicate Early and Often: Start communication long before the migration begins. Use multiple channels to share the "why" behind the project, the expected benefits, and the timeline. Regular updates prevent rumors and build trust.

Identify and Engage Champions: Pinpoint key influencers and enthusiastic employees within different departments to act as champions for the project. Their peer-to-peer advocacy is often more effective than top-down communication.

Provide Role-Specific Training: Generic, one-size-fits-all training rarely sticks. Develop tailored training modules that address the specific workflows, tasks, and needs of different user roles. This makes the learning process relevant and immediately applicable.

Establish Feedback Channels: Create accessible avenues for users to ask questions, report issues, and provide feedback. Responding promptly shows that their concerns are valued and helps you identify and resolve adoption barriers quickly.

8. Detailed Documentation and Knowledge Transfer

A successful data migration project does not end when the final byte of data is transferred; its long-term value is secured through meticulous documentation and effective knowledge transfer. This practice involves creating a comprehensive and accessible record of the entire migration process, from initial planning and data mapping to execution scripts, issue resolutions, and final validation reports. Neglecting this crucial step creates a knowledge silo, leaving your team vulnerable during future system updates, audits, or staff transitions.

This comprehensive approach ensures that the "why" and "how" behind every decision are preserved. It transforms a one-time project into a reusable, institutional asset. By documenting everything, you create a detailed playbook that demystifies the new system's data architecture and operational nuances for both current and future team members. This is a core pillar of effective data migration best practices, preventing critical insights from being lost once the project team disbands.

Why Documentation is a Project Deliverable

The goal is to build a living knowledge base, not a static archive. This repository becomes the single source of truth for system maintenance, troubleshooting, and future enhancements. Companies like Amazon and Google have institutionalized this, creating extensive migration playbooks and runbooks. These documents are not just post-project summaries; they are actively used and refined, enabling teams to replicate successful migrations with predictable outcomes and avoid repeating past mistakes. This documented history is invaluable for training new employees and ensuring business continuity.

The process of creating this documentation should be integrated into the project lifecycle from day one, not treated as an afterthought. It ensures all critical information is captured accurately and in real-time.

Actionable Tips for Implementation

To build a robust documentation and knowledge transfer process, consider these practical steps:

Establish Standards Early: Before the project begins, define documentation templates and standards for everything from data mapping spreadsheets to error-handling procedures. Consistency is key to usability.

Use Collaborative Platforms: Leverage tools like Confluence, SharePoint, or Notion to create a centralized, searchable knowledge base. These platforms support real-time collaboration, version control, and rich media like diagrams and screenshots.

Document Successes and Failures: A complete record includes not only what worked but also what went wrong and why. Documenting roadblocks, bugs, and their resolutions provides powerful lessons for future projects and helps teams troubleshoot similar issues faster. This is fundamental to creating standard operating procedures that actually work.

Schedule Formal Knowledge Transfer: Organize dedicated sessions to walk the permanent operations and maintenance teams through the documentation. This interactive process allows them to ask questions and gain hands-on understanding of the new system, ensuring a smooth handover.
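
Documentation stays consistent when parts of it are generated from the same artifacts the migration itself uses. For example, if field mappings are kept as structured data, a small script can regenerate a mapping table for your wiki whenever the mapping changes. The `FieldMapping` type and the sample entry are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldMapping:
    source: str
    target: str
    transform: str
    notes: str = ""


def _cell(text: str) -> str:
    # Escape pipes and flatten newlines so each mapping stays on one table row.
    return str(text).replace("|", r"\|").replace("\n", " ")


def mapping_to_markdown(mappings: list[FieldMapping]) -> str:
    lines = [
        "| Source field | Target field | Transformation | Notes |",
        "|---|---|---|---|",
    ]
    for m in mappings:
        lines.append(
            f"| {_cell(m.source)} | {_cell(m.target)} | {_cell(m.transform)} | {_cell(m.notes)} |"
        )
    return "\n".join(lines)


if __name__ == "__main__":
    print(mapping_to_markdown([
        FieldMapping("cust_email", "contact.email", "lowercase, trim", "Required in target"),
    ]))
```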

Best Practices Comparison for Data Migration

| Practice | Implementation Complexity 🔄 | Resource Requirements ⚡ | Expected Outcomes 📊 | Ideal Use Cases 💡 | Key Advantages ⭐ |
|---|---|---|---|---|---|
| Comprehensive Data Assessment and Profiling | High complexity; requires specialized tools and expertise | High; time-intensive and skilled personnel | Identifies data quality issues, reduces risks, informs strategy | Large, complex datasets; early migration phases | Reduces surprises, optimizes system design |
| Phased Migration Strategy with Pilot Testing | Moderate complexity; manages multiple environments | Moderate; requires infrastructure for phases | Mitigates risk, enables adjustments between phases | Large-scale migrations with critical systems | Reduces risk, supports continuous learning |
| Robust Data Validation and Testing Framework | High complexity; custom scripting and multi-level tests | High; automated tools and testing resources | Ensures data integrity, reduces post-migration issues | Compliance-heavy environments; complex data structures | Builds confidence, early error detection |
| Comprehensive Backup and Recovery Planning | Moderate complexity; setup and testing required | High; storage and backup infrastructure | Ensures data protection, quick recovery from failures | Any migration with high availability requirements | Maintains continuity, reduces risk of data loss |
| Data Security and Compliance Management | High complexity; involves specialized security expertise | High; security tools and compliance audits | Protects sensitive data, ensures regulatory compliance | Regulated industries; sensitive or personal data | Reduces legal risk, builds stakeholder trust |
| Performance Optimization and Monitoring | Moderate complexity; requires monitoring and tuning | Moderate to high; monitoring tools and infrastructure | Improves speed, reduces downtime, predicts timelines | Large volumes, time-sensitive migrations | Speeds migration, optimizes resource use |
| Change Management and User Training | Moderate complexity; coordination across stakeholders | Moderate; training programs and communications | Increases adoption, reduces support issues | Organizations with many end-users or complex systems | Ensures user readiness, minimizes disruption |
| Detailed Documentation and Knowledge Transfer | Moderate complexity; ongoing effort required | Moderate; collaborative tools and time | Preserves knowledge, supports future maintenance | Complex projects needing audit trails or multiple teams | Speeds future troubleshooting, supports compliance |

From Blueprint to Reality: Automating Your Data Migration Success

Navigating the complexities of data migration is a journey that transforms a meticulously crafted blueprint into a tangible business reality. We've explored the eight pillars of this process, from the foundational necessity of Comprehensive Data Assessment and Profiling to the final, crucial step of Detailed Documentation and Knowledge Transfer. Each of these practices represents a critical checkpoint, ensuring that your data’s journey from one system to another is secure, efficient, and complete. Following these steps methodically provides a strong defense against common pitfalls like data loss, extended downtime, and budget overruns.

However, viewing these practices merely as a sequential checklist limits their potential. The true mastery of a modern data migration strategy lies in understanding them as an interconnected framework. For instance, a robust Data Validation and Testing Framework is only as effective as the initial assessment that defines its parameters. Similarly, a Phased Migration Strategy relies heavily on a comprehensive Backup and Recovery Plan to mitigate risks during each incremental move. It’s this holistic perspective that elevates a project from a simple technical task to a strategic business initiative.

The True Differentiator: Intelligent Automation

While manual diligence and expert oversight are non-negotiable, the genuine competitive advantage in today's landscape is unlocked through automation. The principles we've discussed are the "what" of a successful project; automation is the "how" that makes it scalable, repeatable, and remarkably efficient. Think beyond simple data transfer scripts and consider the broader workflow:

Automated Validation: Instead of relying solely on manual spot-checks, implement automated scripts that compare data checksums, record counts, and field-level values between the source and target systems in real-time. This provides immediate feedback and allows for rapid remediation.

Intelligent Monitoring: Set up automated alerts that trigger based on performance degradation, API error rates, or unusual data latency. This proactive approach to Performance Optimization and Monitoring allows your team to address issues before they impact end-users.

Streamlined Rollbacks: A key component of your recovery plan can be automated. A well-designed workflow can trigger a "one-click" rollback procedure that reverts to the last stable state if validation checks fail or critical errors occur, dramatically reducing Mean Time to Recovery (MTTR).
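
The monitoring and rollback ideas above can be tied together in a few lines. The following is a minimal sketch, assuming each migrated batch reports success or failure; it tracks the error rate over the most recent `window` batches and calls a rollback hook once that rate crosses a threshold. The class name, the default thresholds, and the `rollback` callback are hypothetical placeholders for your own alerting and recovery tooling.

```python
from collections import deque
from typing import Callable


class ErrorRateGuard:
    """Trip a rollback hook when the recent batch error rate exceeds a threshold."""

    def __init__(self, rollback: Callable[[float], None], window: int = 50,
                 max_error_rate: float = 0.02, min_samples: int = 20) -> None:
        if min_samples > window:
            raise ValueError("min_samples cannot exceed window, or the guard never trips")
        self._results: deque = deque(maxlen=window)  # True means the batch succeeded
        self._rollback = rollback
        self._max_error_rate = max_error_rate
        self._min_samples = min_samples
        self.tripped = False

    def record(self, ok: bool) -> None:
        if self.tripped:
            return  # rollback already requested; ignore further results
        self._results.append(ok)
        if len(self._results) < self._min_samples:
            return  # not enough evidence yet to judge the error rate
        error_rate = self._results.count(False) / len(self._results)
        if error_rate > self._max_error_rate:
            self.tripped = True
            self._rollback(error_rate)


if __name__ == "__main__":
    guard = ErrorRateGuard(
        rollback=lambda rate: print(f"Error rate {rate:.1%} over threshold; starting rollback"),
        window=10, max_error_rate=0.2, min_samples=5,
    )
    for ok in [True, True, True, True, True, False, False]:
        guard.record(ok)
```

The `min_samples` floor keeps a single early failure from triggering a rollback, and the guard fires only once, so the recovery procedure is not started repeatedly while it is already running.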

By weaving automation into the fabric of your data migration plan, you transform a high-stress, high-risk event into a controlled and predictable process. This is the essence of modern data migration best practices: leveraging technology not just to move data, but to do so with intelligence, precision, and minimal human intervention.

Beyond Migration: A Foundation for Future Growth

Ultimately, a successful data migration is not the finish line. It is the beginning of a new chapter for your organization, one where data is more accessible, reliable, and powerful. By adhering to these best practices, you are not just ensuring a smooth transition; you are building a stronger foundation for future analytics, innovation, and growth. Your new system will be free of legacy clutter, your data will be clean and validated, and your teams will be empowered with the knowledge and tools they need to succeed. This strategic approach ensures the immense effort invested in the migration pays dividends long after the project is complete, turning a necessary technical upgrade into a lasting competitive advantage.

Ready to move beyond manual processes and implement a truly automated data strategy? At Flow Genius, we specialize in designing and implementing the custom automation workflows that turn data migration best practices into an efficient, repeatable reality. Visit Flow Genius to learn how our Data Advisory and implementation services can transform your complex migration into a streamlined engine for business growth.